----

Building Resilient Infrastructure with Nomad: Scheduling and Self-Healing

// HashiCorp Blog

This is the second post in our series Building Resilient Infrastructure with Nomad. In this series we explore how Nomad handles unexpected failures, outages, and routine maintenance of cluster infrastructure, often without requiring operator intervention.

In this post we'll look at how the Nomad client enables fast and accurate scheduling as well as self-healing through driver health checks and liveness heartbeats.

Nomad client agent

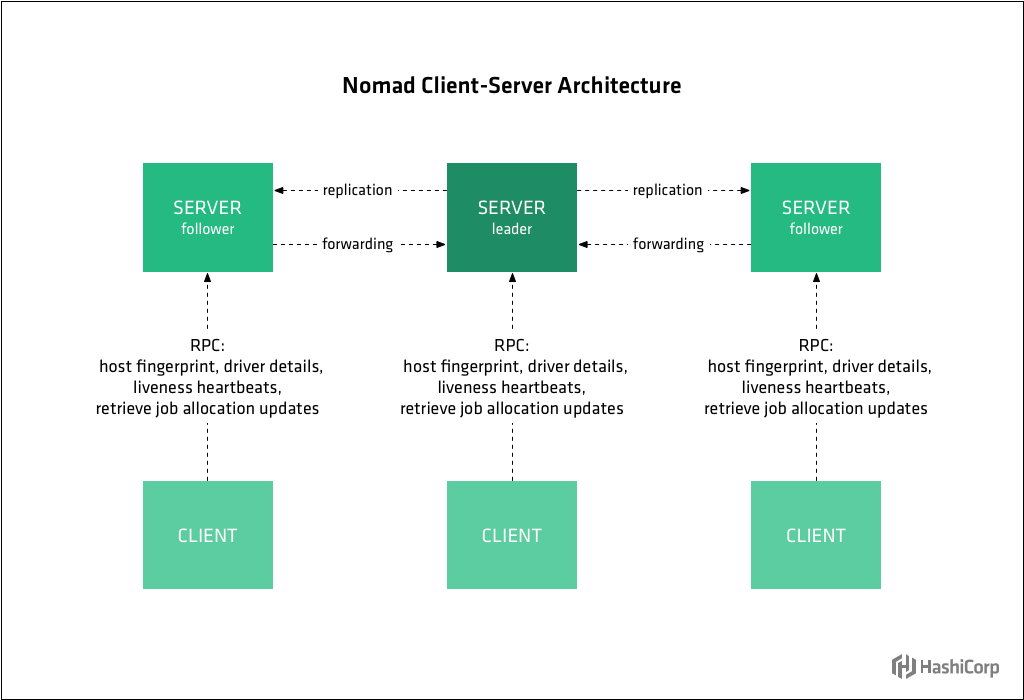

The Nomad agent is a long-running process which runs on every machine that is part of the Nomad cluster. The behavior of the agent depends on whether it is running in client or server mode. Clients are responsible for running tasks, while servers are responsible for managing the cluster. Each cluster usually has 3 or 5 server agents and potentially thousands of clients.

The primary purpose of client mode agents is to run user workloads such as Docker containers. To enable this, the client fingerprints its environment to determine the capabilities and resources of the host machine and which drivers are available. Once this is done, clients register with the servers and continue to check in with them regularly to provide node information, to heartbeat as proof of liveness, and to run any tasks assigned to them.
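To make fingerprinting concrete, here is a minimal Python sketch of the idea. It is not Nomad's implementation: the `fingerprint` function, the `DRIVER_BINARIES` table, and the attribute keys are hypothetical names chosen for illustration, and driver detection is reduced to checking whether a binary is on the `PATH`.

```python
import os
import platform
import shutil

# Hypothetical mapping from a driver name to the binary we probe for.
DRIVER_BINARIES = {
    "docker": "docker",
    "java": "java",
    "qemu": "qemu-system-x86_64",
}


def fingerprint(which=shutil.which):
    """Return a dict describing the host: basic attributes plus detected drivers.

    `which` is injectable so the probe can be replaced in tests.
    """
    drivers = sorted(
        name for name, binary in DRIVER_BINARIES.items() if which(binary)
    )
    return {
        "attributes": {
            "kernel.name": platform.system().lower(),
            "cpu.numcores": os.cpu_count() or 1,
        },
        "drivers": drivers,
    }


if __name__ == "__main__":
    print(fingerprint())
```

A real client would send a payload like this to the servers when it registers and refresh it as the host changes.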

Scheduling

Scheduling is a core function of Nomad servers. It is the process of assigning tasks from jobs to client machines. This process must respect the constraints as declared in the job file, and optimize for resource utilization.

You'll recall from Part 1 of this series that a job is a declarative description of tasks, including their constraints and resources required. Jobs are submitted by users and represent a desired state. The mapping of a task group in a job to clients is done using allocations. An allocation declares that a set of tasks in a job should be run on a particular node. Scheduling is the process of determining the appropriate allocations and is done as part of an evaluation.
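The relationship between jobs, task groups, tasks, and allocations can be sketched as a small data model. The class and field names below are assumptions made for illustration and do not mirror Nomad's internal structures.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Task:
    name: str
    driver: str      # e.g. "docker"
    cpu_mhz: int     # resources the task asks for
    memory_mb: int


@dataclass
class TaskGroup:
    name: str
    count: int       # desired number of instances of this group
    tasks: List[Task] = field(default_factory=list)


@dataclass
class Job:
    id: str
    task_groups: List[TaskGroup] = field(default_factory=list)


@dataclass
class Allocation:
    """Declares that one instance of a task group should run on one node."""
    job_id: str
    task_group: str
    node_id: str


def allocations_needed(job: Job) -> int:
    """Total number of allocations the job's desired state calls for."""
    return sum(group.count for group in job.task_groups)
```

For example, a job with one task group of `count=3` calls for three allocations, each pinned to a node chosen by the scheduler.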

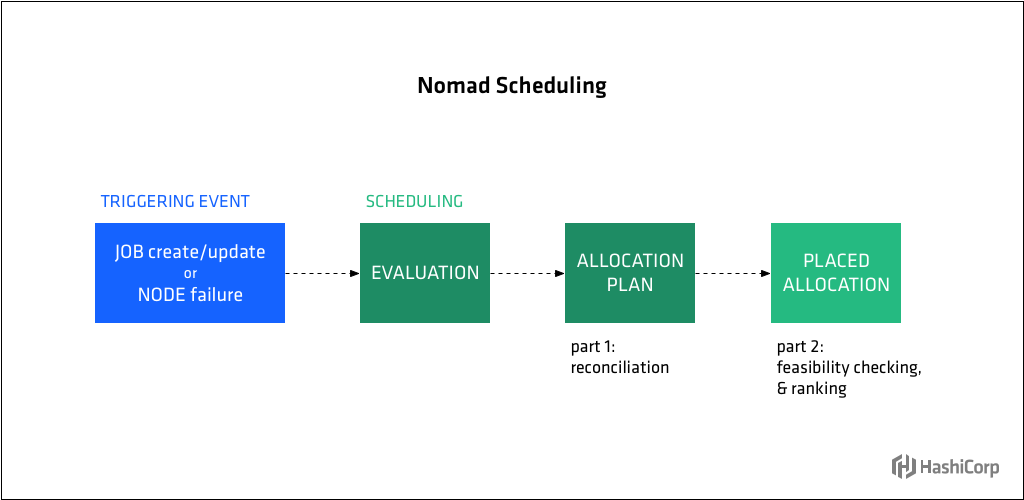

Evaluations are created when a job is created or updated, or when a node fails.

Schedulers, part of the Nomad server, are responsible for processing evaluations and generating allocation plans. There are three scheduler types in Nomad, each optimized for a specific type of workload: service, batch, and system.

First the scheduler reconciles the desired state (indicated by the job file) with the real state of the cluster to determine what must be done. New allocations may need to be placed. Existing allocations may need to be updated, migrated, or stopped.
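A simplified sketch of this reconciliation step follows. It only compares instance counts, ignoring updates and migrations, and the `reconcile` function and its argument shapes are hypothetical rather than taken from Nomad.

```python
from collections import Counter


def reconcile(desired, running):
    """Compare desired instance counts with the allocations that exist.

    desired: dict mapping task group name -> desired count
    running: list of (alloc_id, task_group) tuples currently running
    Returns (to_place, to_stop): to_place maps group -> number of new
    allocations needed; to_stop lists the IDs of surplus allocations.
    """
    have = Counter(group for _, group in running)
    to_place = {g: n - have[g] for g, n in desired.items() if n > have[g]}

    to_stop = []
    seen = Counter()
    for alloc_id, group in running:
        seen[group] += 1
        # Anything beyond the desired count (or for a removed group) is surplus.
        if seen[group] > desired.get(group, 0):
            to_stop.append(alloc_id)
    return to_place, to_stop
```

The output of a step like this is what the placement phases then act on: new allocations to place and existing ones to stop.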

Placing allocations is split into two distinct phases: feasibility checking and ranking. In the first phase the scheduler finds feasible nodes by filtering out unhealthy nodes, nodes missing the necessary drivers, and nodes that fail the constraints specified for the job. This is where Nomad uses the node fingerprinting and driver information provided by Nomad clients.
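The feasibility phase is essentially a chain of filters. The sketch below assumes a hypothetical node representation (a dict with `name`, `status`, `drivers`, and `attributes`) and supports only equality constraints; it is an illustration of the filtering idea, not Nomad's code.

```python
def feasible_nodes(nodes, required_driver, constraints):
    """Return the names of nodes that pass every feasibility check.

    nodes: list of dicts with "name", "status", "drivers" (a set of driver
           names) and "attributes" (a dict of fingerprinted values)
    required_driver: driver the task needs, e.g. "docker"
    constraints: dict of attribute name -> required value (equality only)
    """
    result = []
    for node in nodes:
        if node["status"] != "ready":
            continue  # unhealthy or unreachable node
        if required_driver not in node["drivers"]:
            continue  # node cannot run this kind of task
        if any(node["attributes"].get(k) != v for k, v in constraints.items()):
            continue  # a job constraint is not satisfied
        result.append(node["name"])
    return result
```

Only nodes that survive every filter move on to the ranking phase.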

The second phase is ranking, where the scheduler scores feasible nodes to find the best fit. Scoring is based on a combination of bin packing and anti-affinity (co-locating multiple instances of a task group is discouraged), which optimizes for density while reducing the likelihood of correlated failures. In Nomad 0.9.0, the next major release, scoring will also take into consideration user-specified affinities and anti-affinities.

In a traditional data center environment, deciding where and how to place workloads is typically a manual operation requiring decision-making and intervention by an operator. With Nomad, scheduling decisions are automatic and are optimized for the desired workload and the present state and capabilities of the cluster.
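The interplay between bin packing and anti-affinity can be illustrated with a toy scoring function. The formula here (average utilization after placement, minus a fixed penalty per co-located allocation of the same job) is an assumption made for this sketch and is not Nomad's actual scoring algorithm; the node fields are likewise hypothetical.

```python
def score(node, ask_cpu, ask_mem, job_id, penalty=0.5):
    """Score a node for one placement; higher is better, None means no fit.

    Utilization after placement rewards tight packing. Each allocation of
    the same job already on the node subtracts `penalty` (anti-affinity).
    """
    cpu_util = (node["used_cpu"] + ask_cpu) / node["total_cpu"]
    mem_util = (node["used_mem"] + ask_mem) / node["total_mem"]
    if cpu_util > 1 or mem_util > 1:
        return None  # the node lacks the resources
    collisions = node["allocs"].count(job_id)
    return (cpu_util + mem_util) / 2 - penalty * collisions


def best_node(nodes, ask_cpu, ask_mem, job_id):
    """Return the name of the highest-scoring node, or None if none fit."""
    scored = [(score(n, ask_cpu, ask_mem, job_id), n["name"]) for n in nodes]
    scored = [(s, name) for s, name in scored if s is not None]
    return max(scored)[1] if scored else None
```

With this scheme a nearly full node is preferred over an empty one, unless it already hosts an instance of the same job, in which case the penalty pushes the placement elsewhere.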

Limiting job placement based on driver health

Task drivers are used by Nomad clients to execute tasks and provide resource isolation. Nomad provides an extensible set of task drivers in order to support a broad set of workloads across all major operating systems. Task drivers vary in their configuration options, the environments they can be used in, and the resource isolation mechanisms available.

The types of task drivers in Nomad are: Docker, isolated fork/exec, Java, LXC, Qemu, raw fork/exec, Rkt, and custom drivers written in Go (pluggable driver system coming soon in Nomad 0.9.0).

Driver health checking capabilities, introduced in Nomad 0.8, enable Nomad to limit placement of allocations based on driver health status and to surface driver health status to operators. For task drivers that support health checking, Nomad will not place allocations on nodes whose drivers are reported as unhealthy.

Healing from lost client nodes

While the Nomad client is running, it heartbeats to the servers to maintain liveness. If the heartbeats stop, the Nomad servers assume the client node has failed; they stop assigning new tasks to it and start creating replacement allocations. From the servers' point of view it is impossible to distinguish between a network failure and a Nomad agent crash, so both cases are handled the same way. Once the network recovers or a crashed agent restarts, the node status is updated and normal operation resumes.

Limiting job placement based on driver health, and automatically detecting failed client nodes and rescheduling jobs accordingly, are two self-healing features of Nomad that occur without the need for additional monitoring, scripting, or other operator intervention.
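Heartbeat-based failure detection amounts to tracking a time-to-live per node. The following sketch assumes a hypothetical `HeartbeatTracker` class with an explicit `now` argument so the behavior is easy to follow; Nomad's real heartbeat handling is more involved.

```python
class HeartbeatTracker:
    """Marks a node "down" when no heartbeat arrives within the TTL."""

    def __init__(self, ttl_seconds):
        self.ttl = ttl_seconds
        self.last_seen = {}  # node_id -> time of last heartbeat

    def heartbeat(self, node_id, now):
        """Record that node_id checked in at time `now` (in seconds)."""
        self.last_seen[node_id] = now

    def status(self, node_id, now):
        """Return "ready" if the node checked in within the TTL, else "down"."""
        last = self.last_seen.get(node_id)
        if last is None or now - last > self.ttl:
            return "down"
        return "ready"

    def down_nodes(self, now):
        """Known nodes whose allocations would need replacing."""
        return sorted(n for n in self.last_seen if self.status(n, now) == "down")
```

Note that the tracker cannot tell why heartbeats stopped, which is why a network partition and an agent crash look identical; a later heartbeat from the same node simply flips it back to "ready".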

Summary

In this second post in our series on Building Resilient Infrastructure with Nomad (part 1), we covered how Nomad client and server agents enable fast and accurate scheduling as well as self-healing through driver health checks and liveness heartbeats.

Nomad client agents are responsible for determining the resources and capabilities of their hosts, including which drivers are available, and for running tasks. Nomad server agents are responsible for maintaining cluster state and for scheduling tasks. Client and server agents work together to enable fast, accurate scheduling as well as self-healing actions such as automatic rescheduling of tasks off failed nodes and marking nodes with failing drivers as ineligible to receive tasks requiring those drivers.

In the next post, we'll look at how Nomad helps operators manage the job lifecycle: updates, rolling deployments (including canary and blue-green deployments), and migrating tasks as part of client node decommissioning.

----
