HashiCorp and Microsoft have partnered to create Terraform modules that follow Microsoft's Azure Well-Architected Framework and best practices. In previous blog posts, we’ve demonstrated how to build a secure Azure reference architecture and deploy securely into Azure with HashiCorp Terraform and Vault, as well as how to manage post-deployment operations. This post looks at how HashiCorp and Microsoft have created building blocks that allow you to repeatedly, securely, and cost-effectively accelerate AI adoption on Azure with Terraform. Specifically, it covers how to do this by using Terraform to provision Azure OpenAI services.

The state of artificial intelligence

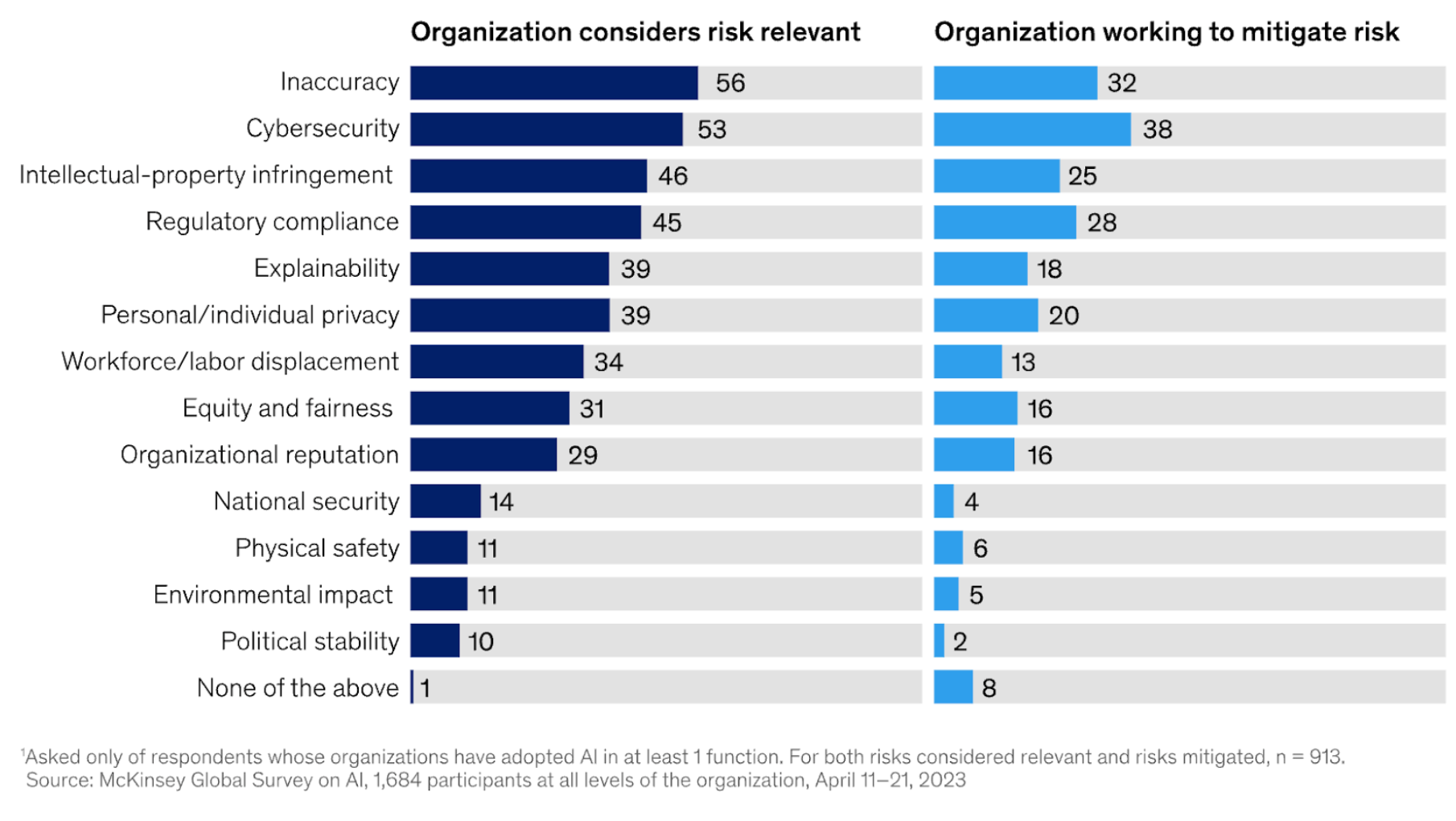

In its report on the state of AI in 2023, McKinsey states that “AI has risen from a topic relegated to tech employees to a focus of company leaders: nearly one-quarter of surveyed C-suite executives say they are personally using generative AI tools for work, and more than one-quarter of respondents from companies using AI say generative AI is already on their boards’ agendas.” The report also highlights the potential risks from AI, including cybersecurity, compliance, re-skilling, and inaccuracy.

In this post, we use Terraform to solve some of the challenges mentioned, such as security and compliance, while looking at key AI use cases and their deployments on the Azure platform.

Artificial intelligence building blocks

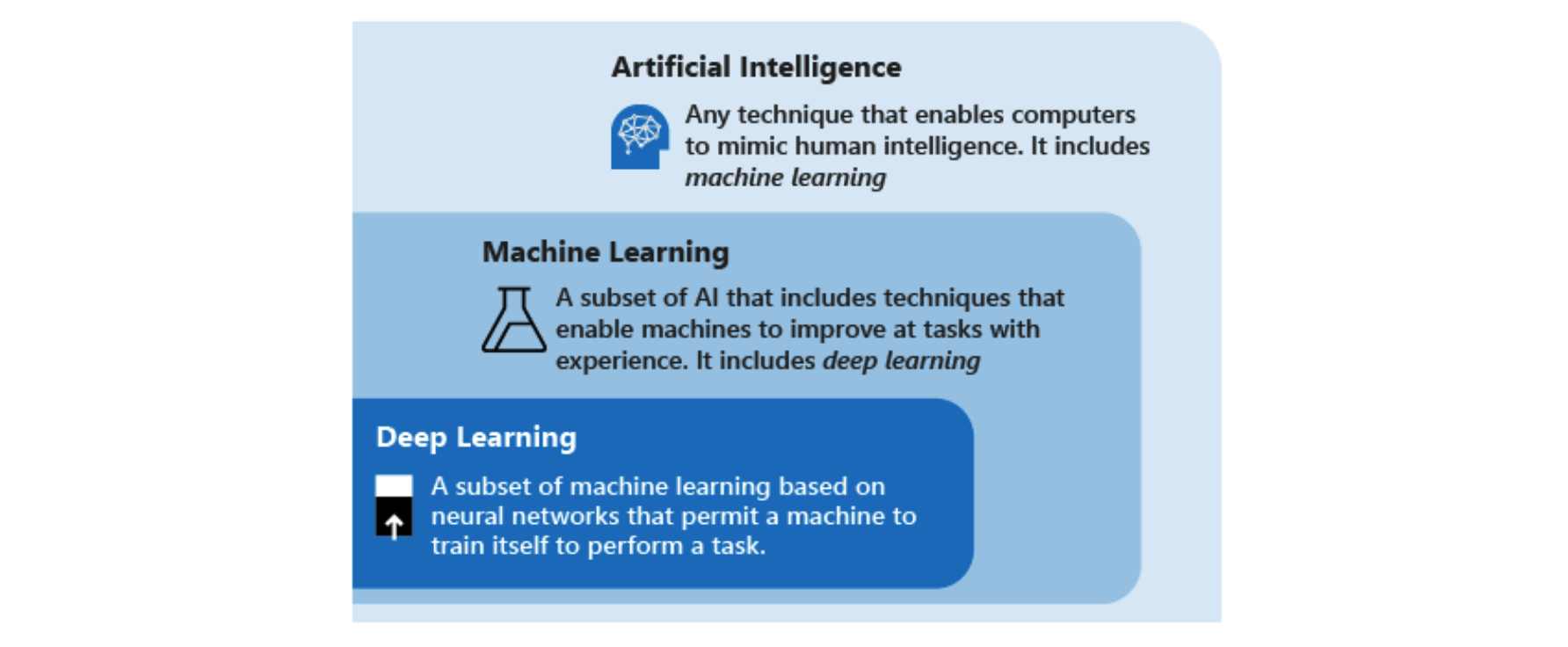

Before you dive into AI, you'll want to understand how two key subtopics, machine learning and deep learning, fit under it. The diagram above shows the relationship between AI, machine learning, and deep learning.

Generative AI is a subset of AI that was recently popularized by OpenAI's ChatGPT and falls into the category of deep learning. It refers to a computer's ability to simulate human-like behavior and create new content.

OpenAI is an AI research company that builds large language models (LLMs), which are deep learning models trained on very large text data sets, and provides an interface to them via ChatGPT. The model is the “engine” behind these applications, capable of recognizing, summarizing, translating, predicting, and generating text and other content.

Azure OpenAI and AI Services

Azure AI services is Microsoft's suite of out-of-the-box and customizable AI tools that fall under machine learning. They provide a wide range of functionality, including natural language processing, computer vision, and speech recognition. These services let organizations extract insights from data, automate processes, enhance customer experiences, and make more informed decisions through the integration of intelligent algorithms and models into their applications and workflows.

Azure OpenAI Service focuses on applying LLMs and generative AI to a variety of use cases. Azure OpenAI Service provides REST API access to OpenAI's powerful language models including the GPT-4, GPT-35-Turbo, and Embeddings model series.

Accelerated OpenAI and Azure AI Service deployments

Terraform modules are collections of configuration files located within a single directory. These modules serve to encapsulate sets of resources designed for specific tasks, streamlining the development process by minimizing the amount of code required for similar infrastructure components. Leveraging Terraform modules can significantly expedite the deployment of an AI service on Azure.

The Terraform module for deploying Azure OpenAI Service is a community-built module that has been developed to quickly deploy the Azure OpenAI Service with Terraform. Additional modules for Microsoft Azure can be found in the Terraform Registry.
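To make the module idea concrete, here is a minimal sketch of a local module that wraps a single Azure OpenAI account and is then called twice from a root configuration. The directory layout, module name, variable names, and resource names are all hypothetical and are not taken from the community module; the sketch assumes the `azurerm` 3.x provider, and the file boundaries are marked with comments.

```hcl
# --- modules/openai/variables.tf (hypothetical local module) ---
variable "name" {
  type        = string
  description = "Name of the Azure OpenAI account; also used as its custom subdomain."
}

variable "location" {
  type        = string
  description = "Azure region for the account."
}

variable "resource_group_name" {
  type        = string
  description = "Resource group that will contain the account."
}

# --- modules/openai/main.tf ---
# Azure OpenAI is provisioned as a Cognitive Services account of kind "OpenAI".
resource "azurerm_cognitive_account" "this" {
  name                  = var.name
  location              = var.location
  resource_group_name   = var.resource_group_name
  kind                  = "OpenAI"
  sku_name              = "S0"
  custom_subdomain_name = var.name # must be globally unique
}

# --- modules/openai/outputs.tf ---
output "endpoint" {
  description = "Endpoint of the Azure OpenAI account."
  value       = azurerm_cognitive_account.this.endpoint
}

# --- main.tf (root configuration) ---
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "~> 3.0"
    }
  }
}

provider "azurerm" {
  features {}
}

resource "azurerm_resource_group" "rg" {
  name     = "rg-openai-example" # illustrative name
  location = "East US"
}

# The same module is reused for two environments with different inputs.
module "openai_dev" {
  source              = "./modules/openai"
  name                = "example-openai-dev"
  location            = azurerm_resource_group.rg.location
  resource_group_name = azurerm_resource_group.rg.name
}

module "openai_test" {
  source              = "./modules/openai"
  name                = "example-openai-test"
  location            = azurerm_resource_group.rg.location
  resource_group_name = azurerm_resource_group.rg.name
}
```

The root configuration only supplies inputs and reads outputs such as `module.openai_dev.endpoint`; the resource details live in one place, which is what keeps similar infrastructure components from being copied around.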

OpenAI

Many customers want to learn more about getting started with OpenAI. A quickstart for kicking this off is to use a pre-built module like the Terraform module for Azure OpenAI. As you get more familiar with the module and your unique requirements, you can construct your own module based on this module to achieve a custom starting point.

Using the simple code snippet below, you can leverage the module to deploy an environment that takes advantage of the GPT or DALL-E models (DALL-E is an AI system that can create realistic images and art from a natural-language description). Note that your Azure subscription must apply for and be approved to use Azure OpenAI before you can use this.

    terraform {
      required_providers {
        azurerm = {
          source  = "hashicorp/azurerm"
          version = "~> 3.0"
        }
      }
    }

    provider "azurerm" {
      features {}
    }

    resource "azurerm_resource_group" "rg" {
      name     = "OpenAI"
      location = "East US"
    }

    module "openai" {
      # Module arguments are case-sensitive: use lowercase source and version.
      source  = "Azure/openai/azurerm"
      version = "0.1.1"

      depends_on = [azurerm_resource_group.rg]

      location            = azurerm_resource_group.rg.location
      resource_group_name = azurerm_resource_group.rg.name
    }

Microsoft tech community examples

Microsoft's technical community has developed content that can help you understand AI use cases and provides code examples. Here are two that cover OpenAI integrations: the first is a specific application integration with Azure Kubernetes Service (AKS), and the second focuses on creating a repeatable landing zone.

Deploy an Azure OpenAI/ChatGPT app on AKS with Terraform

Here’s an example of how to deploy and run an Azure OpenAI/ChatGPT app on AKS with Terraform. This sample shows how to deploy an AKS cluster and Azure OpenAI Service using Terraform modules with the Azure provider. It uses the Terraform provider to deploy a Python chatbot that authenticates against Azure OpenAI using Azure AD workload identity and calls the Chat Completion API of a ChatGPT model.
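The sketch below shows one way the workload identity wiring described above could look in Terraform. It is not the code from the linked sample: all resource names, the VM size, and the Kubernetes namespace and service account in `subject` (`chatbot` / `chatbot-sa`) are assumptions for illustration, and it assumes a recent `azurerm` 3.x provider that includes `azurerm_federated_identity_credential`.

```hcl
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "~> 3.0"
    }
  }
}

provider "azurerm" {
  features {}
}

resource "azurerm_resource_group" "example" {
  name     = "rg-aks-openai-example" # illustrative name
  location = "East US"
}

# AKS cluster with the OIDC issuer and workload identity enabled.
resource "azurerm_kubernetes_cluster" "example" {
  name                = "aks-openai-example"
  location            = azurerm_resource_group.example.location
  resource_group_name = azurerm_resource_group.example.name
  dns_prefix          = "aksopenai"

  oidc_issuer_enabled       = true
  workload_identity_enabled = true

  default_node_pool {
    name       = "default"
    node_count = 1
    vm_size    = "Standard_D2s_v3" # assumed size
  }

  identity {
    type = "SystemAssigned"
  }
}

# Azure OpenAI account; a custom subdomain is needed for Azure AD token auth.
resource "azurerm_cognitive_account" "openai" {
  name                  = "example-openai-aks"
  location              = azurerm_resource_group.example.location
  resource_group_name   = azurerm_resource_group.example.name
  kind                  = "OpenAI"
  sku_name              = "S0"
  custom_subdomain_name = "example-openai-aks" # must be globally unique
}

# Identity the chatbot pod will use instead of an API key.
resource "azurerm_user_assigned_identity" "chatbot" {
  name                = "id-chatbot"
  location            = azurerm_resource_group.example.location
  resource_group_name = azurerm_resource_group.example.name
}

# Allow that identity to call the Azure OpenAI account.
resource "azurerm_role_assignment" "chatbot_openai" {
  scope                = azurerm_cognitive_account.openai.id
  role_definition_name = "Cognitive Services OpenAI User"
  principal_id         = azurerm_user_assigned_identity.chatbot.principal_id
}

# Trust tokens issued by the cluster for one specific service account.
resource "azurerm_federated_identity_credential" "chatbot" {
  name                = "chatbot"
  resource_group_name = azurerm_resource_group.example.name
  parent_id           = azurerm_user_assigned_identity.chatbot.id
  audience            = ["api://AzureADTokenExchange"]
  issuer              = azurerm_kubernetes_cluster.example.oidc_issuer_url
  subject             = "system:serviceaccount:chatbot:chatbot-sa" # assumed namespace:serviceaccount
}
```

With this in place, a pod running under the matching service account can exchange its Kubernetes token for an Azure AD token, so no OpenAI key needs to be stored in the cluster.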

Deploy an Azure AI landing zone with Terraform

Using Terraform as the infrastructure as code tool, you can deploy Azure OpenAI Service in a repeatable way by leveraging a landing zone architecture. This means that customers get access to best practices from the Azure Cloud Adoption Framework (CAF), a comprehensive guide by Microsoft to ensure successful cloud adoption and alignment with business goals. By combining the landing zone construct with the resources it builds, organizations can create a repeatable pattern that accelerates their AI initiatives and simplifies the building of Azure services.

In this article, Microsoft explores in more detail how to deploy the Azure OpenAI landing zone architecture.

Azure AI Services

Customers are trying to understand how to scale AI in their organization. This encompasses a wide range of services including machine learning. The Azure Machine Learning workspace is the top-level resource for Azure Machine Learning, providing a centralized place to work with all the artifacts you create when you use Azure Machine Learning.

The workspace keeps a history of all jobs, including logs, metrics, output, and a snapshot of your scripts. The workspace stores references to resources like data stores and compute. It also holds all assets, including models, environments, components and data assets, making it easy to collaborate with colleagues to create machine learning artifacts and group-related work.

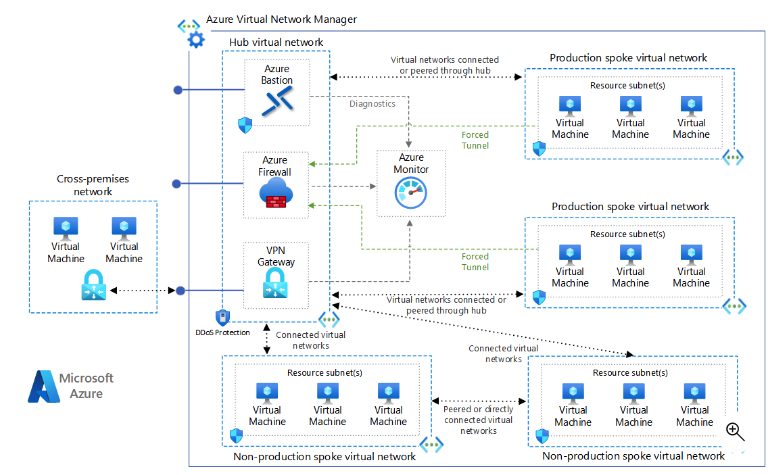

The Hub-spoke network topology in Azure is a reference architecture that implements a pattern where the hub-virtual network acts as a central point of connectivity to many spoke virtual networks. The spoke virtual networks connect with the hub and can be used to isolate workloads. You can also enable cross-premises scenarios by using the hub to connect to on-premises networks. If you want to start with a bare hub and spoke deployment, the code can be found here.
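As a rough illustration of the pattern, the sketch below creates one hub and one spoke virtual network and peers them in both directions. The names and address ranges are assumptions chosen for the example, not values from the reference architecture, and a real deployment would add subnets, firewalls, and gateways as needed.

```hcl
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "~> 3.0"
    }
  }
}

provider "azurerm" {
  features {}
}

resource "azurerm_resource_group" "network" {
  name     = "rg-hub-spoke-example" # illustrative name
  location = "East US"
}

# Hub: central point of connectivity.
resource "azurerm_virtual_network" "hub" {
  name                = "vnet-hub"
  location            = azurerm_resource_group.network.location
  resource_group_name = azurerm_resource_group.network.name
  address_space       = ["10.0.0.0/16"] # assumed range
}

# Spoke: isolated workload network; ranges must not overlap with the hub.
resource "azurerm_virtual_network" "spoke" {
  name                = "vnet-spoke-ml"
  location            = azurerm_resource_group.network.location
  resource_group_name = azurerm_resource_group.network.name
  address_space       = ["10.1.0.0/16"] # assumed range
}

# Peering is directional, so one resource is needed for each side.
resource "azurerm_virtual_network_peering" "hub_to_spoke" {
  name                         = "hub-to-spoke"
  resource_group_name          = azurerm_resource_group.network.name
  virtual_network_name         = azurerm_virtual_network.hub.name
  remote_virtual_network_id    = azurerm_virtual_network.spoke.id
  allow_virtual_network_access = true
  allow_forwarded_traffic      = true
}

resource "azurerm_virtual_network_peering" "spoke_to_hub" {
  name                         = "spoke-to-hub"
  resource_group_name          = azurerm_resource_group.network.name
  virtual_network_name         = azurerm_virtual_network.spoke.name
  remote_virtual_network_id    = azurerm_virtual_network.hub.id
  allow_virtual_network_access = true
  allow_forwarded_traffic      = true
}
```

Additional spokes follow the same shape: one more virtual network plus a pair of peerings to the hub. Spokes are not peered to each other, which is what keeps workloads isolated.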

Download a Visio file of this architecture from Microsoft

Using the code that creates an Azure Machine Learning workspace, you can combine it with the secure hub-and-spoke environment above to create a collaborative, shared, and secure environment across your organization.

    # Dependent resources for Azure Machine Learning
    resource "azurerm_application_insights" "default" {
      name                = "${random_pet.prefix.id}-appi"
      location            = azurerm_resource_group.default.location
      resource_group_name = azurerm_resource_group.default.name
      application_type    = "web"
    }

    resource "azurerm_key_vault" "default" {
      name                     = "${var.prefix}${var.environment}${random_integer.suffix.result}kv"
      location                 = azurerm_resource_group.default.location
      resource_group_name      = azurerm_resource_group.default.name
      tenant_id                = data.azurerm_client_config.current.tenant_id
      sku_name                 = "premium"
      purge_protection_enabled = false
    }

    resource "azurerm_storage_account" "default" {
      name                            = "${var.prefix}${var.environment}${random_integer.suffix.result}st"
      location                        = azurerm_resource_group.default.location
      resource_group_name             = azurerm_resource_group.default.name
      account_tier                    = "Standard"
      account_replication_type        = "GRS"
      allow_nested_items_to_be_public = false
    }

    resource "azurerm_container_registry" "default" {
      name                = "${var.prefix}${var.environment}${random_integer.suffix.result}cr"
      location            = azurerm_resource_group.default.location
      resource_group_name = azurerm_resource_group.default.name
      sku                 = "Premium"
      admin_enabled       = true
    }

    # Machine Learning workspace
    resource "azurerm_machine_learning_workspace" "default" {
      name                          = "${random_pet.prefix.id}-mlw"
      location                      = azurerm_resource_group.default.location
      resource_group_name           = azurerm_resource_group.default.name
      application_insights_id       = azurerm_application_insights.default.id
      key_vault_id                  = azurerm_key_vault.default.id
      storage_account_id            = azurerm_storage_account.default.id
      container_registry_id         = azurerm_container_registry.default.id
      public_network_access_enabled = true

      identity {
        type = "SystemAssigned"
      }
    }

Leveraging the base hub and spoke allows you to deploy Azure services in the hub and offer access to them via API endpoints to resources in the spokes. This makes an “AI hub” that you can use to share the service with other virtual networks that users can access, build applications in, and experiment with, allowing for faster sharing of resources to create new business outcomes.
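The workspace configuration above refers to several objects it does not define: `var.prefix`, `var.environment`, `random_pet.prefix`, `random_integer.suffix`, `data.azurerm_client_config.current`, and `azurerm_resource_group.default`. The sketch below is one plausible set of supporting definitions; the defaults, value ranges, and validation rules are assumptions, not the contents of the referenced repository.

```hcl
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "~> 3.0"
    }
    random = {
      source  = "hashicorp/random"
      version = "~> 3.0"
    }
  }
}

provider "azurerm" {
  features {}
}

# Storage account names allow only lowercase letters and digits (max 24 chars),
# so the inputs that feed those names are validated up front.
variable "prefix" {
  type    = string
  default = "ml" # assumed default

  validation {
    condition     = can(regex("^[a-z0-9]{1,8}$", var.prefix))
    error_message = "The prefix must be 1-8 lowercase letters or digits."
  }
}

variable "environment" {
  type    = string
  default = "dev" # assumed default

  validation {
    condition     = can(regex("^[a-z0-9]{1,6}$", var.environment))
    error_message = "The environment must be 1-6 lowercase letters or digits."
  }
}

variable "location" {
  type    = string
  default = "East US" # assumed default
}

# Tenant ID of the identity running Terraform, used by the key vault.
data "azurerm_client_config" "current" {}

# Readable random name for resources that allow hyphens.
resource "random_pet" "prefix" {
  prefix = var.prefix
  length = 2
}

# Eight-digit suffix for globally unique names (key vault, storage, registry).
resource "random_integer" "suffix" {
  min = 10000000
  max = 99999999
}

resource "azurerm_resource_group" "default" {
  name     = "${random_pet.prefix.id}-rg"
  location = var.location
}
```

With these limits, the longest generated name is 8 + 6 + 8 + 2 = 24 characters, which stays within the storage account and key vault name limits.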

Secure Azure machine learning workspace

To get started with the full example, clone the repository, change into the newly created directory, initialize Terraform within this new directory, and then run a plan and apply. This will create a full secure hub-and-spoke Azure Machine Learning workspace.

Copy these commands in order:

    git clone https://github.com/dawright22/secure-azure-machine-learning-workspace.git
    cd secure-azure-machine-learning-workspace
    terraform init
    terraform plan
    terraform apply

At this point, you should have a configuration that describes the full set of resources you require to get started with Azure Machine Learning in a network-isolated setup. This configuration creates new network components, so please be aware of the costs.

You can now use Azure Bastion to securely connect to the Windows Data Science Virtual Machine (DSVM). With many popular data science tools pre-installed and pre-configured to jumpstart building intelligent applications for advanced analytics, the DSVM can set you up with the tools you need to start your machine learning journey.

Next steps

There are endless ways practitioners can use Terraform to scale the adoption of artificial intelligence in an Azure environment. Be sure to check out our ongoing webinar series, where HashiCorp and Microsoft cover how to build, deploy, and secure resources.

from HashiCorp Blog https://bit.ly/45UeMhq
