Nowadays, you can hardly find a company with no backup or DR strategy in place. Data is becoming an organization’s most valuable asset, so keeping it safe and available is becoming a key priority. But does it really matter where your backups are stored? Well, Veeam answered that question by promoting the “3-2-1” backup rule: keep at least 3 copies of your data, store 2 of them locally but on different media, and keep at least 1 copy offsite. Sounds reasonable.
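To make the rule concrete, here is a minimal Python sketch that checks a set of copies against 3-2-1 as worded above. The `BackupCopy` model and its field names are hypothetical and exist only for illustration; the sketch also assumes the production data counts as one of the copies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BackupCopy:
    """One copy of the data (hypothetical model, for illustration only)."""
    name: str
    media: str     # e.g. "disk", "tape", "object-storage"
    offsite: bool  # True if the copy lives outside the primary site


def satisfies_3_2_1(copies: list[BackupCopy]) -> bool:
    """Check: at least 3 copies, local copies on 2+ media types, 1+ copy offsite."""
    local_media = {c.media for c in copies if not c.offsite}
    offsite_count = sum(1 for c in copies if c.offsite)
    return len(copies) >= 3 and len(local_media) >= 2 and offsite_count >= 1
```

A production disk, a local tape, and one cloud bucket pass the check; drop the cloud bucket and the check fails.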

What about the devices to store your backup data? Legend has it that tapes came first: huge capacity and the ability to keep data for a long time, but unfortunately slow. Then came disks: great capacity, durability, and better speed than tapes, but at a higher price.

In my previous article, I highlighted the cost of public cloud storage for your backups. So, the cloud can perfectly well become that “offsite” option for keeping your data safe. Moreover, you don’t have to worry about maintenance, since large cloud providers such as Amazon, Google, and Microsoft take care of their infrastructure. Or do you?

No cloud storage provider guarantees 100% availability and security. Sure, they are getting close to that number, but an unexpected power outage or a similar incident may knock their services out one day. It happened to Amazon S3 last year, when the service went down because of an operational error. This year, S3 went down due to a power outage. Fortunately, Amazon did not lose its customers’ data, but the service was still unavailable. So, the key words in the 3-2-1 backup rule are “at least”: at least one copy offsite. That’s why today we’ll take a closer look at solutions that support multiple public clouds under one umbrella, allowing you to keep several backup copies in different clouds.

What we are actually looking at

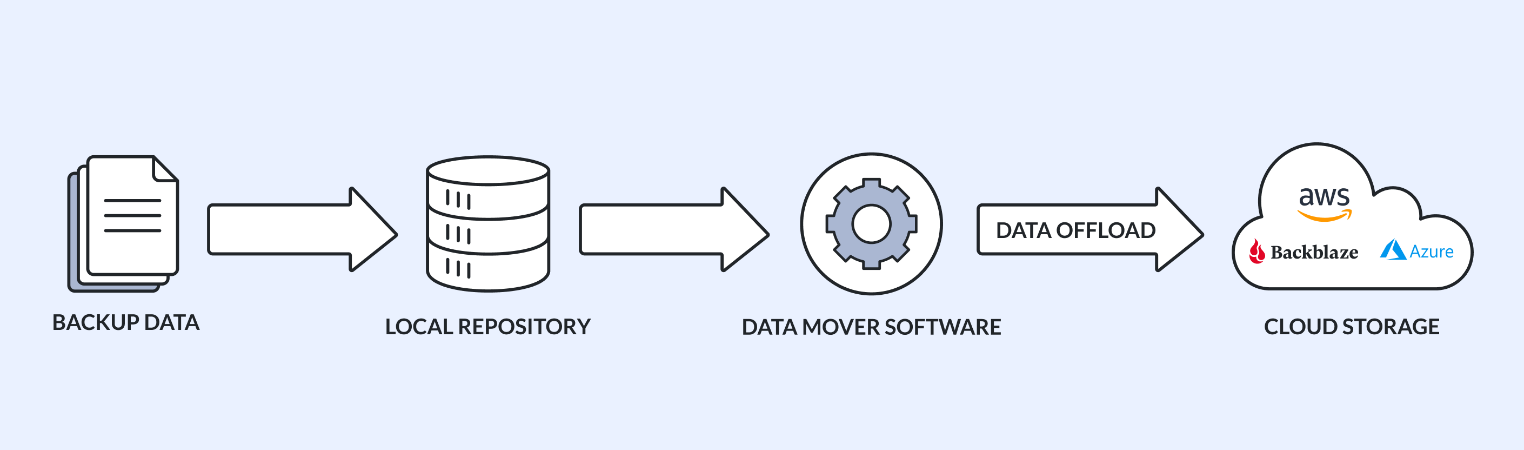

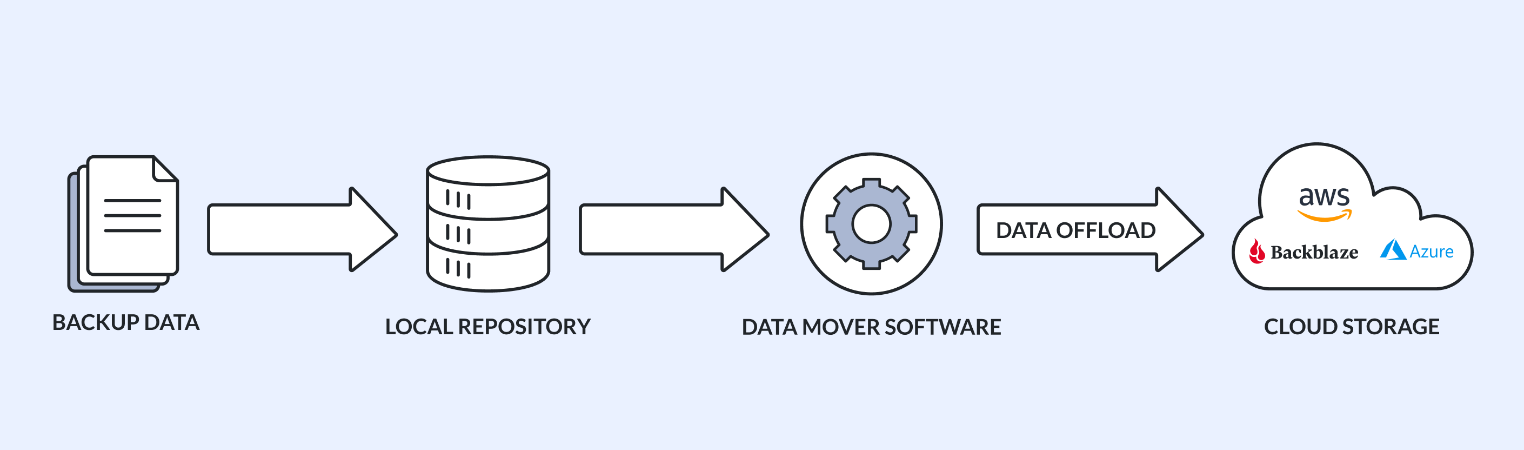

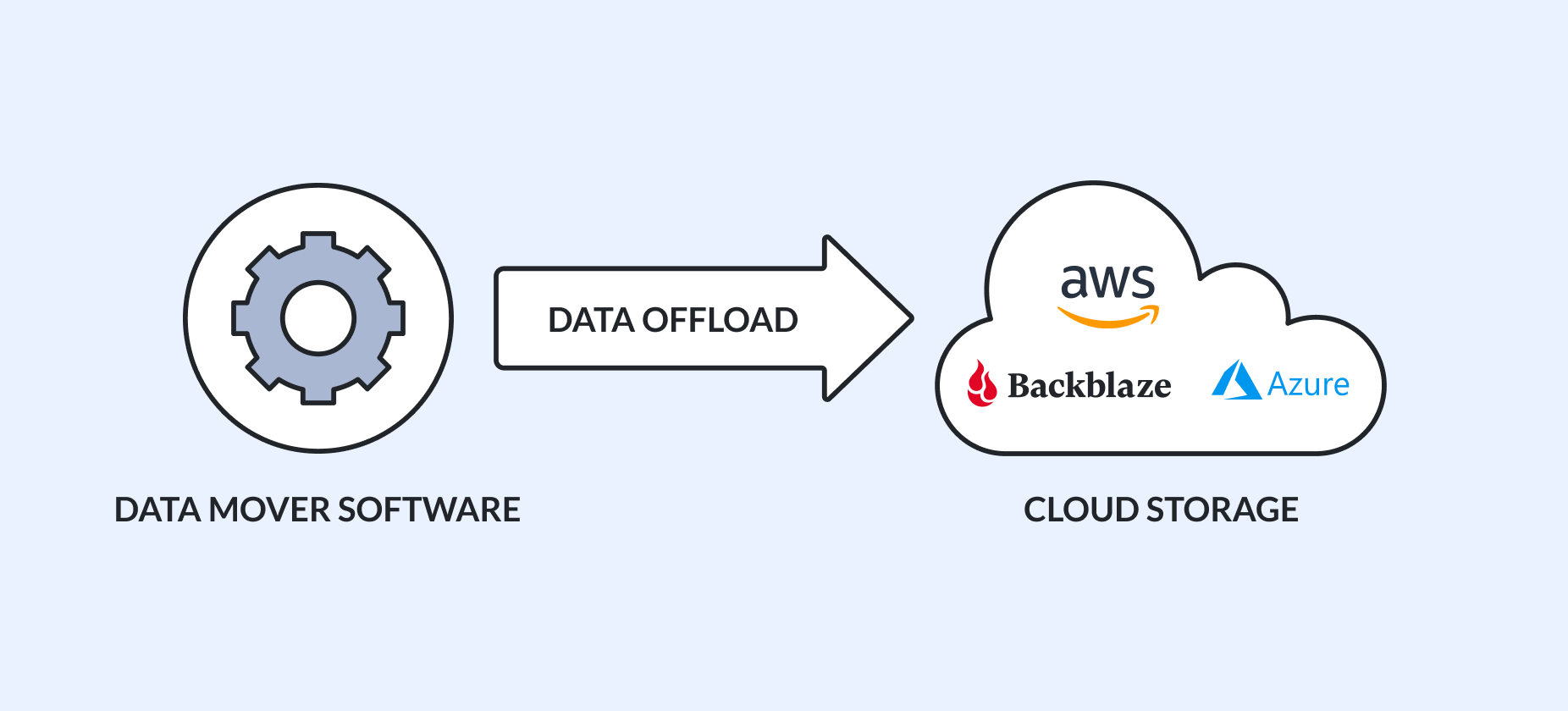

If you look carefully at the backup process, it will, within a reasonable margin of error, look as shown in the figure below:

Some solutions create backups and move them to the repository. There, the data is kept for a while and then shifted to the cloud, where it stays as long as you need.

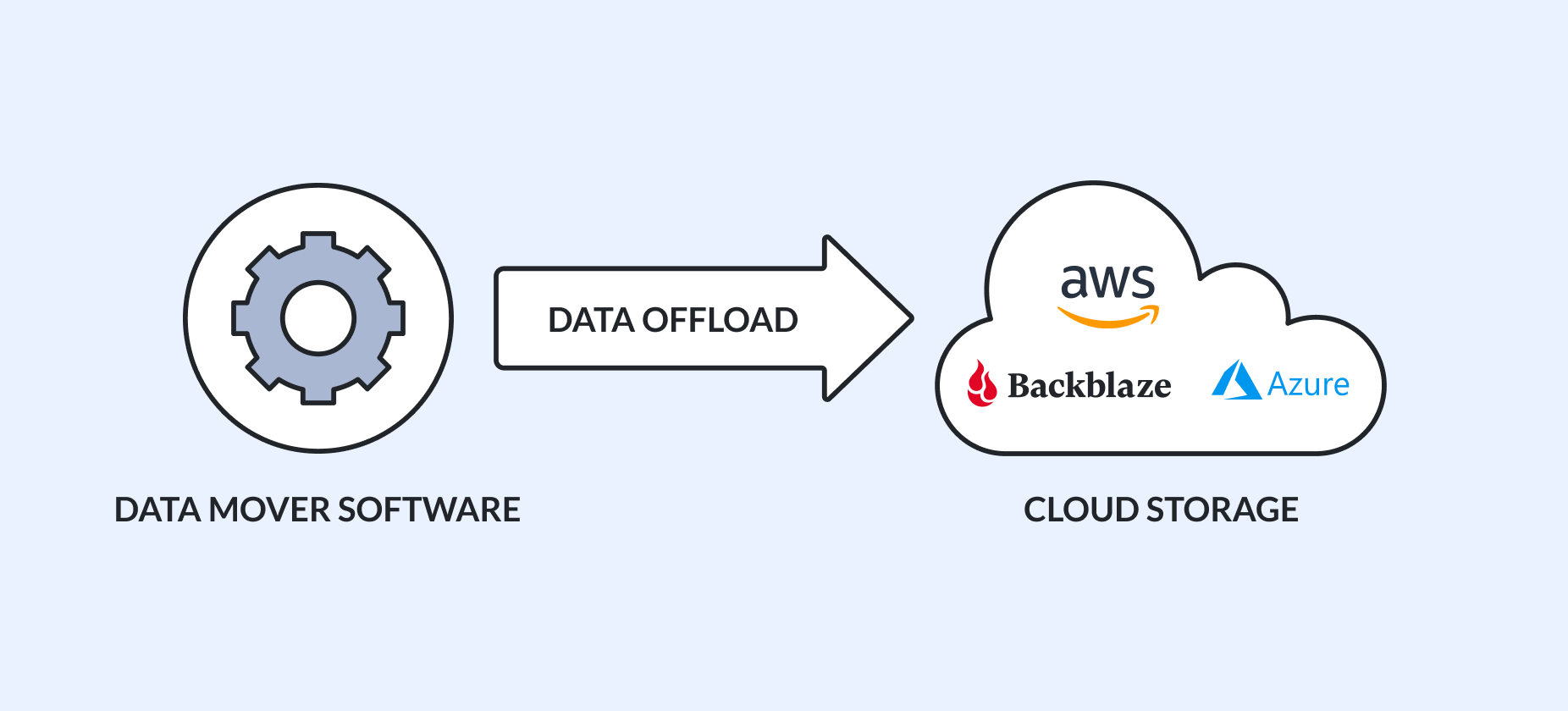

In this article, I’d rather discuss dedicated software that serves as a “data router”. In other words, the software that moves backups from the repository to the cloud.

Now, let’s have a look at what we have on the table!

Rclone

When I thought about something that lets you back up your data to several clouds, Rclone and CloudBerry were the first solutions that popped into my head. Rclone acts as a data mover, synchronizing your local repository with cloud-based object storage. Basically, you create a backup using something else (e.g., Veeam Backup & Replication), place it on-premises, and Rclone sends it to several clouds. Originally developed for Linux, Rclone has a command-line interface for syncing files and directories between clouds.

OS compatibility

Rclone runs on all major operating systems, but only through the command-line interface.

Cloud Support

The solution works with the most popular public cloud storage services, such as Microsoft Azure, Amazon S3 and Glacier, Google Cloud Platform, Backblaze B2, etc.

Feature set

Rclone commands work with just about any remote storage system, be it public cloud storage or a backup server somewhere else. It can also send data to multiple destinations, but bi-directional sync was not supported at the time of writing. In other words, changes made to your files in the cloud do not affect their local copies. Synchronization is incremental on a file-by-file basis. Rclone also preserves file timestamps, so you can easily find the right backup.

The solution provides two options for moving data to the cloud: sync and copy. The first one, sync, makes the cloud destination identical to the specified local directory: new and changed backups are uploaded, and files that no longer exist locally are deleted from the cloud. The second mode, copy, as its name suggests, only copies data from on-premises to the cloud, so deleting your files locally won’t affect the ones stored in the cloud. There’s also the check mode, which verifies that the hashes of local and cloud files match. Learn more about Rclone: https://rclone.org/
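To send one local repository to several clouds with Rclone, you can loop over your configured remotes. The Python sketch below only builds the command lines for `rclone copy` (or `rclone sync`) followed by `rclone check`; it does not run them. The remote names and paths are hypothetical and assume remotes already set up with `rclone config`. Keep in mind that `copy` never deletes anything at the destination, while `sync` removes destination files that are missing from the source.

```python
import shlex


def build_rclone_commands(
    local_dir: str,
    remotes: list[str],
    mode: str = "copy",
    dry_run: bool = False,
) -> list[list[str]]:
    """Build one transfer command and one verification command per remote."""
    if mode not in {"copy", "sync"}:
        raise ValueError("mode must be 'copy' or 'sync'")
    commands = []
    for remote in remotes:
        transfer = ["rclone", mode, local_dir, remote]
        if dry_run:
            # --dry-run makes rclone report what it would do without changing anything.
            transfer.append("--dry-run")
        commands.append(transfer)
        # 'rclone check' compares source and destination (sizes and hashes where available).
        commands.append(["rclone", "check", local_dir, remote])
    return commands


# Hypothetical remotes; print the commands instead of executing them.
for cmd in build_rclone_commands("/backups", ["s3remote:backups", "b2remote:backups"]):
    print(shlex.join(cmd))
```

To actually run the commands, each list can be passed to `subprocess.run(cmd, check=True)`. Starting with `dry_run=True` is a sensible precaution before the first `sync`.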

CloudBerry Backup

CloudBerry Backup is built on CloudBerry’s own backup technology, developed for service providers and enterprise IT departments. Note that it is full-fledged backup software: it lets you not only move backups to the cloud but also create them.

OS compatibility

It is a cross-platform solution.

Cloud Support

So far, the solution can talk to Microsoft Azure, Amazon S3 and Glacier, Google Cloud Platform, Backblaze B2, and many more!

Feature set

Being intended for large IT departments and cloud service providers, CloudBerry Backup provides features that make it really handy for them. For starters, it leaves room for client customization, up to a complete rebranding of the solution. Now, let’s look at the backup side of this thing!

The solution allows backing up files and directories manually. If you are too lazy for that, you can sync the selected directory to the root of the bucket. CloudBerry Backup also lets you schedule backups, so you won’t miss them. Another cool thing is backup job management and monitoring: thanks to this feature, you are always aware of the backup processes on client machines. The solution offers AES 256-bit end-to-end encryption to keep your data safe.

Learn more about CloudBerry Backup: https://www.cloudberrylab.com/managed-backup.aspx

StarWind VTL

Have you ever heard of virtual tape libraries (VTL)? I thought these things had died out, but apparently I was wrong.

OS compatibility

Unfortunately, this product is available only for Windows.

Cloud Support

So far, StarWind VTL can talk to popular cloud storage services like AWS S3 and Glacier, Azure, and Backblaze B2.

Feature set

The product has many cool features for those who want to back up to the cloud. First, it sends data to the respective cloud tier and then automatically de-stages it further. This automation is what makes StarWind VTL really cool. Second, the product supports both on-premises and public cloud object storage. Third, StarWind VTL, like the solutions reviewed above, supports deduplication and compression, making your storage utilization more efficient. Finally, the product supports client-side encryption.
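To illustrate the general idea of automatic de-staging, here is a minimal Python sketch of an age-based tiering policy. This is not StarWind VTL’s actual logic: the tier names and age thresholds are hypothetical and serve only to show how older backups could be moved to colder, cheaper tiers.

```python
from datetime import date

# Hypothetical policy: (minimum age in days, tier name), ordered from coldest to warmest.
TIER_POLICY = [(90, "archive"), (30, "cool"), (0, "hot")]


def pick_tier(created: date, today: date, policy=TIER_POLICY) -> str:
    """Return the coldest tier whose minimum age the backup has already reached."""
    age_days = (today - created).days
    for min_age, tier in policy:
        if age_days >= min_age:
            return tier
    # Fallback for dates in the future: keep the backup in the warmest tier.
    return policy[-1][1]
```

A scheduler could call `pick_tier` for every stored backup once a day and move any backup whose tier has changed.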

StarWind VTL also has several inherent VTL features. This means it can potentially give us more than just something that accepts your backups from a Veeam-like product and throws them into the public cloud.

All operations in a StarWind VTL environment are performed via the Management Console and the Web Console, a web interface that works in any browser.

Learn more about StarWind Virtual Tape Library: https://www.starwindsoftware.com/starwind-virtual-tape-library

Duplicati

Duplicati was designed for online backups from the ground up. Yes, it is one more piece of backup software on today’s list that can send your data directly to multiple clouds. Duplicati can also use local storage as a backend.

OS compatibility

It is free and compatible with Windows, macOS, and Linux.

Cloud Support

So far, the solution talks to Amazon S3, Mega, Google Cloud Storage, and Azure.

Feature set

Duplicati has some awesome features. First, the solution is free; notably, its team allows free use even for commercial purposes. Second, Duplicati employs decent encryption, compression, and deduplication, making your storage more efficient and safe. Third, the solution adds timestamps to your files, so you can find a specific backup more easily. Fourth, to make their users’ lives simpler, the Duplicati team has developed a backup scheduler. Now, you won’t miss the backup time! What makes this piece of software special and really handy is backup content verification. Indeed, you never know whether a backup works until you actually restore from it. Thanks to this feature, you can pinpoint broken backups before it is too late.
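The general idea behind content verification can be sketched in a few lines of Python: record a hash for every backup file when it is written, then recompute the hashes later and compare. This is a generic SHA-256 manifest check, not Duplicati’s actual implementation, and the function names are hypothetical.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large backups do not have to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for block in iter(lambda: fh.read(chunk_size), b""):
            digest.update(block)
    return digest.hexdigest()


def build_manifest(backup_dir: Path) -> dict[str, str]:
    """Map each file's path (relative to backup_dir) to its SHA-256 hash."""
    return {
        str(p.relative_to(backup_dir)): sha256_of(p)
        for p in sorted(backup_dir.rglob("*"))
        if p.is_file()
    }


def find_broken_files(backup_dir: Path, manifest: dict[str, str]) -> list[str]:
    """Return files that are missing or whose current hash differs from the manifest."""
    broken = []
    for rel_path, expected in manifest.items():
        target = backup_dir / rel_path
        if not target.is_file() or sha256_of(target) != expected:
            broken.append(rel_path)
    return broken
```

Running `find_broken_files` on a schedule flags damaged or missing backup files long before you need to restore from them.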

The solution is managed via a web interface, so you can run it from any browser.

Learn more about Duplicati: https://www.duplicati.com/

Duplicacy

Duplicacy works with popular cloud storage services. Apart from the cloud, it can use your SFTP servers and NAS boxes as backends.

OS compatibility

The solution is compatible with Windows, Mac OS X, and Linux.

Cloud Support

So far, Duplicacy can offload data to Backblaze B2, Amazon S3, Google Cloud Storage, Microsoft Azure, and many more!

Feature set

Duplicacy not only routes your backups to the cloud but also creates them. Note that each backup created by this solution is incremental, yet each one is treated as a full snapshot, which simplifies restoring, deleting, and moving backups between storages. Duplicacy sends your files to multiple cloud storages and uses strong client-side encryption. Another cool thing about this solution is its ability to give multiple clients simultaneous access to the same storage.
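How can a backup be incremental and still behave like a full snapshot? One common way is content-addressed chunk storage: every snapshot lists all the chunks it needs, but a chunk that is already stored is never uploaded again. The Python sketch below is a toy illustration of that idea with fixed-size chunks. It is not Duplicacy’s actual algorithm, and the `ChunkStore` class is hypothetical.

```python
import hashlib


class ChunkStore:
    """Toy content-addressed store: identical chunks are stored only once."""

    def __init__(self, chunk_size: int = 4) -> None:
        self.chunk_size = chunk_size
        self.chunks: dict[str, bytes] = {}  # chunk hash -> chunk data
        self.snapshots: dict[int, dict[str, list[str]]] = {}  # revision -> {file: [hashes]}

    def backup(self, revision: int, files: dict[str, bytes]) -> int:
        """Record a full snapshot; return how many new chunks had to be stored."""
        uploaded = 0
        snapshot: dict[str, list[str]] = {}
        for name, data in files.items():
            hashes = []
            for i in range(0, len(data), self.chunk_size):
                chunk = data[i:i + self.chunk_size]
                key = hashlib.sha256(chunk).hexdigest()
                if key not in self.chunks:  # only unseen chunks cost storage
                    self.chunks[key] = chunk
                    uploaded += 1
                hashes.append(key)
            snapshot[name] = hashes
        self.snapshots[revision] = snapshot
        return uploaded

    def restore(self, revision: int) -> dict[str, bytes]:
        """Rebuild every file of a revision directly from its own chunk list."""
        return {
            name: b"".join(self.chunks[h] for h in hashes)
            for name, hashes in self.snapshots[revision].items()
        }
```

Because each revision carries its complete chunk list, any revision can be restored on its own without replaying earlier backups, even though only the changed chunks were stored.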

Finally, I’d like to mention Duplicacy’s comprehensive GUI, which features one-page configuration for quick backup scheduling and retention policy management. If you are a command-line fan, you can manage Duplicacy via the command line instead.

Learn more about Duplicacy: https://duplicacy.com/

So what?

Undoubtedly, keeping one copy in the public cloud is good. As long as it complies with the 3-2-1 backup rule, everything should be wonderful. Yet public cloud services fail and get messed up, since none of them runs on a foolproof and outage-proof infrastructure. If you are out of luck one day, the cloud service will go down at the very moment you decide to retrieve your data. That day, it would be nice to have an extra backup in another cloud.

Sure, there are plenty of wonderful backup solutions that can talk to multiple public cloud storages, and I shed light on some of them in this article. Some are full-fledged backup software (namely CloudBerry Backup, Duplicati, and Duplicacy), while others just move existing backups to multiple clouds. Among the solutions reviewed today, there’s also a product that creates virtual tape libraries. It is really awesome because it streamlines your backup environment, bringing not only the ability to talk to multiple clouds but also some inherent VTL features. I hope this article comes in handy and you’ll employ one of the reviewed solutions in your backup infrastructure.

from StarWind Blog https://ift.tt/H46ErfL
